Config Management

This blog post accompanies my DH-Tech talk about config management. tl;dr: There is a recording available.

Problems with infrastructure administration

Operating an infrastructure can be hard. Among the most common problems is that of reproducibility, i.e.

- Is the documentation both current and correct?

- Is it clear how to do a fresh install or set up a secondary system?

Another is that of uniformity, i.e. are there any standards?

- Is ntp installed everywhere and configured correctly?

- Do we have the same SSL ciphers everywhere?

- Is SSH possible somewhere with (default) password or DSA key?

Finally, the state of a system diverges over time from its documentation or manual, or from its “twin” system. This is known as configuration drift.

This leads to problems such as an upgrade that runs flawlessly on the test system, only to totally break the production instance when doing exactly the same.

Solutions

One possibility is the adoption of configuration management tools.

They allow automatic configuration of systems by realising the desired configuration state through software. Ideally, this makes all changes traceable.

On the plus side, this enables the transfer of configuration between different systems by comparing their defined states. It also becomes very easy to scale single changes to many different servers. By reducing drift, compliance with internal and external policies can be ensured.

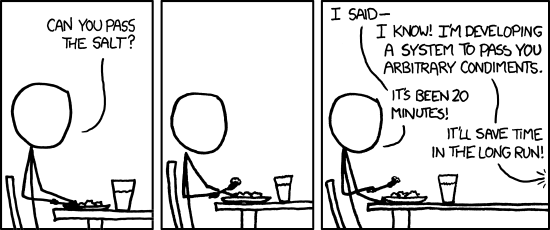

On the other hand, it brings with it the need to completely rethink system administration. As an admin, you will basically have to learn a new programming language. With it, making a single one-line change can be cumbersome.

There are a number of solutions available, such as

- Cfengine (1993 – GPLv3)

- Puppet (2005 – Apache 2.0)

- Chef (2009 – Apache 2.0)

- Salt (2011 – Apache 2.0)

- Ansible (2012 – GPLv3+)

Many of these have commercial options available. You may want to read James’ revisionist history post to learn more about them.

Puppet

At FE and DARIAH-DE in particular, we use Puppet.

Its principle is the (partial) declaration of system state through declarative »manifests«, which then get compiled into a »catalog« and applied to the system. This is usually done in a client-server architecture.

This approach provides a high degree of abstraction. The manifests control users, packages and services in the same way, whether you manage Debian, SLES or RHEL distributions.

Some examples might be

    file { '/etc/nologin':
      ensure  => file,
      mode    => '0644',
      content => 'This is not the server you are looking for!',
    }

    if ($facts['os']['name'] == 'Ubuntu') {
      package { 'landscape-common':
        ensure => absent,
      }
    }
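
To make the abstraction across distributions a little more concrete, here is a minimal sketch of a web server that is installed and started with the same resource types on different OS families. The choice of Apache and the `default` branch are assumptions for illustration, not something taken from our actual code.

```puppet
# Sketch only: the package name differs per OS family, the resource types do not.
$web_package = $facts['os']['family'] ? {
  'Debian' => 'apache2',
  'RedHat' => 'httpd',
  default  => 'apache2', # assumption, check the name for your distribution
}

package { $web_package:
  ensure => installed,
}

# On both families the service carries the same name as the package.
service { $web_package:
  ensure  => running,
  enable  => true,
  require => Package[$web_package],
}
```

Puppet picks the matching provider (apt, yum, zypper, systemd, ...) on its own, so the manifest only has to deal with the differing names.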

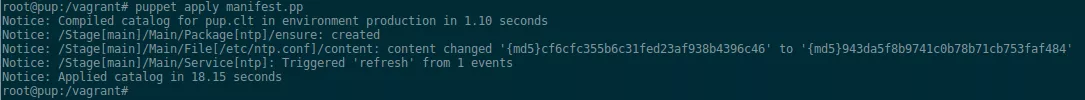

A basic example for ntp would be

    package { 'ntp':
      ensure => installed,
    }

    file { '/etc/ntp.conf':
      content => template('ntp.conf'),
      notify  => Service['ntp'],
    }

    service { 'ntp':
      ensure => running,
      enable => true,
    }

which, when applied, will have the following effect:

New Scenarios

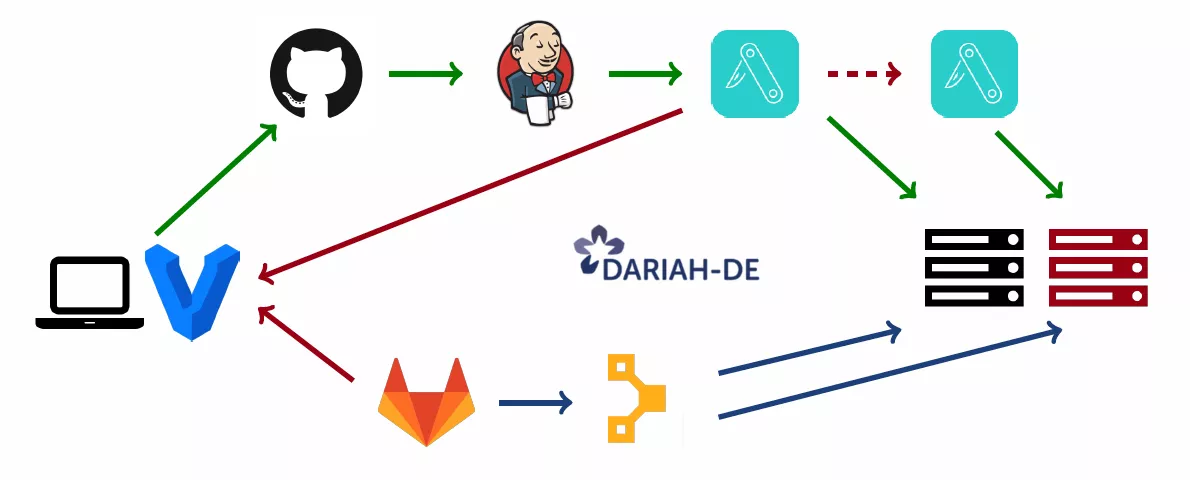

Combined with CI/CD pipelines, this can enable fully automated deployments.

At DARIAH-DE, whenever a developer pushes to GitHub, we trigger a Jenkins build that creates a Debian package, which is put into our aptly repository. This gets installed on the staging server in the next run of puppet. Using the same puppet code from our internal GitLab and the pre-built packages, the developer can automatically build a Vagrant VM locally that mirrors the real system. Once everyone is satisfied, the package is manually transferred to the production repo, and the same puppet code deploys the accepted version to the production server.
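
The puppet side of such a pipeline can be very small. The following is a sketch under assumptions: `myapp` is a hypothetical package and service name, and the apt repository (staging or production) is assumed to be configured on the node already.

```puppet
# Hypothetical application package built by the CI pipeline.
# 'latest' makes every puppet run install the newest version found in
# whichever repository the node is pointed at.
package { 'myapp':
  ensure => latest,
  notify => Service['myapp'], # restart the application after an upgrade
}

service { 'myapp':
  ensure => running,
  enable => true,
}
```

Because the code is identical on staging and production, the only thing that decides which version runs where is the content of the respective repository.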

Infrastructure as code

Once the infrastructure is managed through puppet code, system administration can be treated as software development. This includes the adoption of methods of agile software development from version control through pull requests, code review, tickets all the way through to testing and CI.

Thus the boundaries between Development and Operations disappear, hence the term DevOps. I gave a talk about our implementation a while ago at FrOSCon.

On the other hand, this code base needs maintenance and refactoring just like any other. Yet in contrast to manually managed systems, it can be treated like any other technical debt from the software engineering side.

Where to start

To start out with automation, choose known services and processes first. In particular, start with recurring and tedious tasks, but don’t re-invent the wheel. There are off-the-shelf modules available for just about anything.
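
As an illustration of using an off-the-shelf module, the following sketch assumes that the `puppetlabs-ntp` module from the Puppet Forge is installed. The server names are placeholders.

```puppet
# Assumes the puppetlabs-ntp module is available on the server.
# The module takes care of package, config file and service.
class { 'ntp':
  servers => ['0.pool.ntp.org', '1.pool.ntp.org'],
}
```

This replaces the three hand-written resources with a single class declaration and leaves the distribution-specific details to the module.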

More concretely, you might want to start with things like

- ntp

- accounts, SSH keys

- monitoring / metrics

- logging

- sshd, firewall
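
For accounts and SSH keys, a first step might look like the sketch below. The user name, the key title and the key itself are placeholders. On Puppet 6 and later, `ssh_authorized_key` comes from the `sshkeys_core` module rather than from Puppet itself.

```puppet
# Hypothetical account, for illustration only.
user { 'alice':
  ensure     => present,
  managehome => true,
  shell      => '/bin/bash',
}

# The key value is a truncated placeholder; insert the real public key
# (without the type prefix and the trailing comment).
ssh_authorized_key { 'alice@laptop':
  ensure => present,
  user   => 'alice',
  type   => 'ssh-ed25519',
  key    => 'AAAAC3NzaC1lZDI1NTE5AAAA...',
}
```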

Just pick something simple and build from there. However, never do it only half-way, i.e. automating the installation but not the configuration. Also, never do something manually now and automate it tomorrow.

Finally, take care when automating SSH and firewall, as mistakes can lock you out. Take particular care when automating backup restores! (Hint: just don’t!)

Some more thought should be given to managing secrets.
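
One way to lower the risk of locking yourself out is to let Puppet validate the new `sshd_config` before it replaces the old one. This is a sketch only: the module path in `source` is hypothetical, and the service name `ssh` assumes Debian or Ubuntu.

```puppet
file { '/etc/ssh/sshd_config':
  ensure       => file,
  owner        => 'root',
  group        => 'root',
  mode         => '0600',
  source       => 'puppet:///modules/profile/sshd_config', # hypothetical path
  # '%' is replaced by the path of the candidate file; if sshd rejects it,
  # the resource fails and the existing config stays in place.
  validate_cmd => '/usr/sbin/sshd -t -f %',
  notify       => Service['ssh'],
}

service { 'ssh':
  ensure => running,
  enable => true,
}
```

This only catches syntax errors. A syntactically valid config that forbids your own login will still be applied, so testing on a disposable machine first remains a good idea.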
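
As a starting point, secrets can be kept out of the manifests and looked up from Hiera instead, for example encrypted with hiera-eyaml. The sketch below assumes a hypothetical key `profile::db_password`, a hypothetical config file, and an already existing directory `/etc/myapp`.

```puppet
# 'profile::db_password' is a hypothetical Hiera key.
$db_password = lookup('profile::db_password', String)

file { '/etc/myapp/secret.conf':
  ensure    => file,
  owner     => 'root',
  group     => 'root',
  mode      => '0600',
  # Wrapping the content in Sensitive keeps it out of logs and reports.
  content   => Sensitive("password=${db_password}\n"),
  show_diff => false,
}
```

The secret still ends up in the compiled catalog, so access to the Puppet server and its caches needs to be restricted as well.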

All of this takes lots of time.

Lessons learned

Developers don’t want to care about ntp and firewall configuration.

They want to change their applications’ settings.

At the same time, not every admin is an expert for SSL/SSH ciphers and key exchange algorithms.

Sharing and distributing administration responsibilities allows these burdens to be shared (another topic I recently gave a talk about).

SSL ciphers, firewalls, etc. are not trivial.

People manage (their) components on all (relevant) machines.

Security is important – before there is a single user!

Some usual quotes are

“I am afraid of automation – I never know what it will do to the system while I’m away.”

“It’s too early for that, we are still developing and can’t possibly decide on where to put the config file already.”

“I’m not a developer, I can’t use git and GitHub!”

“I don’t know how to code well, so I’m way too embarrassed to share anything!”

“If we publish our config, everyone will know how to hack us!”

Benefits for us

- Reproducibility and defined state (provenance).

- Deployment lead time massively reduced.

- Any config management is better than hand-crafted snowflakes.

- Harmonisation of infrastructure.

- Good starting point for new projects.

- Sharing expertise to collaboratively build something.

New Challenges

While codifying infrastructure has many advantages, it doesn’t replace documentation! And while it may be easy to adapt snippets for new use cases, regular refactoring is necessary yet time-consuming.

By introducing a lot of new moving parts through the automation pipelines, the overall system complexity increases drastically. Some software can be hard to automate; conversely, it pays to take automation into consideration when developing software yourself.

Human aspects

While there are good reasons never to touch a running system, sometimes it can be necessary. Just remember to take everyone with you.

In particular, changes to roles can be challenging when admins find themselves doing development and developers take care of production systems.

It takes work, constantly and persistently, but it can be worthwhile.

“The bug is fixed in staging, and we have half an hour before the big workshop – Let’s deploy to production!”

Introductory material on puppet, used internally at FE, is available to play with.

10.59350/hp9nq-64953